December 12, 2017

Rod Little Co-Author of Nature Article on Redefining Statistical Significance

In 'Redefine statistical significance,' a recent article in Nature, one of the most highly cited scientific publications worldwide, SRC Faculty Member Rod Little joined a large team of co-authors in arguing for a new standard of statistical significance for claims of new scientific discoveries (as opposed to reproductions of existing findings). In short, the co-authors argue that in many settings where p < 0.05 is the current standard of evidence for a new finding, including many of the social sciences, a threshold of p < 0.005 is more appropriate.

The authors begin by addressing the current reproducibility crisis in science, which may stem in part from misuse of indicators of statistical significance such as the p-value. Their premise is simple: '…statistical standards of evidence for claiming new discoveries in many fields of science are simply too low.' They argue that continued use of p < 0.05 as a 'default' standard likely produces too many false positive findings, and that results meeting only this threshold should now be referred to as 'suggestive' of an important finding.

The first major section of the article reviews the correct interpretation of p-values and discusses the attractive properties of presenting evidence against a null hypothesis in terms of a Bayes factor together with the estimated prior odds that an alternative hypothesis is true (relative to a specified null hypothesis). The authors describe the relationship between the p-value and the Bayes factor in selected settings, presenting compelling evidence that a threshold of p < 0.05 corresponds to 'weak' or 'very weak' evidence in terms of Bayes factors. While the article says little about how exactly Bayes factors might be computed in practice, which may leave readers unfamiliar with the topic wanting more, the authors provide several relevant references.
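The link between p-values, Bayes factors and prior odds can be made concrete with a short sketch. It assumes one widely used calibration, the upper bound BF <= 1 / (-e * p * ln(p)) on the Bayes factor in favor of the alternative (valid only for p < 1/e), which is not necessarily the exact set of bounds the authors plot. It also uses the identity posterior odds = Bayes factor x prior odds. The function names and the 1:10 prior odds are hypothetical choices for illustration.

```python
import math


def bayes_factor_bound(p: float) -> float:
    """Upper bound on the Bayes factor for H1 vs H0 implied by a p-value,
    using the -e * p * ln(p) calibration (only valid for 0 < p < 1/e)."""
    if not 0 < p < 1 / math.e:
        raise ValueError("bound is only defined for 0 < p < 1/e")
    return 1.0 / (-math.e * p * math.log(p))


def posterior_odds(bayes_factor: float, prior_odds: float) -> float:
    """Posterior odds of H1 vs H0 = Bayes factor x prior odds."""
    return bayes_factor * prior_odds


# Hypothetical prior odds of 1:10 that the tested alternative is true.
PRIOR_ODDS = 1 / 10

for p in (0.05, 0.005):
    bf = bayes_factor_bound(p)
    post = posterior_odds(bf, PRIOR_ODDS)
    print(f"p = {p}: BF <= {bf:.2f}, posterior odds <= {post:.2f}")
```

Under this bound, p = 0.05 allows a Bayes factor of at most about 2.5, while p = 0.005 allows at most about 13.9, which is consistent with describing p < 0.05 as weak evidence. With 1:10 prior odds, even the best case at p = 0.05 leaves the null hypothesis more likely than the alternative.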

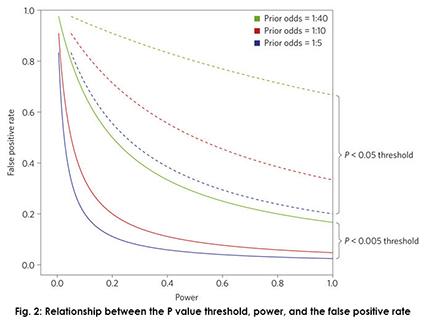

The second major section of the article addresses the 'Why 0.005?' question. First, the authors again refer to the relationship between p-values and Bayes factors in selected hypothesis testing settings, and indicate that a choice of 0.005 would correspond to 'substantial' or 'strong' evidence in these settings, following conventional classifications of Bayes factors. Second, the authors show how this choice of a default threshold for significance would substantially reduce false positive rates, especially in studies with lower statistical power. Finally, the authors cite some recent studies that have demonstrated high potential gains in rates of reproducibility that would come from using this threshold.
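The false positive argument can be sketched with a simple calculation. Assuming tests run at significance level alpha with a given power, and prior odds that a tested alternative hypothesis is true, the expected share of significant results that are false positives is alpha / (alpha + power x prior odds). The function name and the 1:10 prior odds are hypothetical choices for illustration, not figures taken from the article.

```python
def false_positive_rate(alpha: float, power: float, prior_odds: float) -> float:
    """Expected share of significant results that are false positives.

    alpha: significance threshold (type I error rate when H0 is true)
    power: probability of a significant result when H1 is true
    prior_odds: hypothetical odds that a tested H1 is true (1/10 means 1:10)
    """
    # For every true null tested there are `prior_odds` true alternatives:
    # the nulls yield `alpha` false positives, the alternatives yield
    # `power * prior_odds` true positives.
    return alpha / (alpha + power * prior_odds)


for power in (0.2, 0.5, 0.8):
    for alpha in (0.05, 0.005):
        fpr = false_positive_rate(alpha, power, prior_odds=1 / 10)
        print(f"power={power:.1f} alpha={alpha}: false positive rate = {fpr:.1%}")
```

With power 0.8 and prior odds of 1:10, this gives roughly 38% at alpha = 0.05 versus roughly 6% at alpha = 0.005, and the share at alpha = 0.05 grows as power falls.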

I appreciated that the final major section of the article presents reasonable objections to this new threshold, including higher false negative rates. While the stricter threshold would require larger sample sizes to maintain a given level of statistical power, the authors argue that there would be a benefit in terms of resources saved from studies that would no longer be conducted under false premises. Other objections include that the new threshold would not stop multiple testing or 'p-hacking' (a point with which the authors fully agree), that appropriate thresholds may vary across scientific communities, and that the use of p-values should cease altogether in favor of other measures of evidence such as effect sizes. The authors acknowledge that there is not yet widespread consensus that significance testing based on p-values should be eliminated completely, and conclude by noting that journals can help with the transition to a new standard of evidence, given the shortcomings of the old (and indeed arbitrary) standard.
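The sample-size cost of a stricter threshold can be approximated with the standard normal-approximation formula for a two-sided test, in which the required sample size scales with (z_{1-alpha/2} + z_{power})^2. This is a minimal sketch under that approximation, assuming 80% power; the function name is hypothetical and the calculation is not taken from the article.

```python
from statistics import NormalDist


def relative_sample_size(alpha_new: float, alpha_old: float, power: float = 0.8) -> float:
    """Ratio of required sample sizes when moving from alpha_old to alpha_new,
    holding power fixed, under a two-sided normal (z-test) approximation."""
    z = NormalDist().inv_cdf  # quantile function of the standard normal

    def n_factor(alpha: float) -> float:
        # Required n is proportional to (z_{1-alpha/2} + z_{power})^2
        return (z(1 - alpha / 2) + z(power)) ** 2

    return n_factor(alpha_new) / n_factor(alpha_old)


ratio = relative_sample_size(0.005, 0.05)
print(f"Required sample size grows by a factor of about {ratio:.2f}")
```

Under these assumptions, moving from 0.05 to 0.005 multiplies the required sample size by about 1.7, i.e. roughly 70% more observations for the same power.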

While I would have liked a bit more information (or references) for practitioners on how Bayes factors should be computed in practice (again, for those unfamiliar with the topic), and on where estimates of the prior odds in favor of an alternative hypothesis might come from in different fields, this short article still makes a compelling case for a new standard of statistical significance if various fields are going to continue using p-values as an important measure of new scientific evidence.

This article was published in Nature:

Benjamin, Daniel J., Berger, James O., et al. (2017). Redefine statistical significance. Nature.