August 17, 2017

Sunghee Lee and Other SRC Researchers Assess the Total Survey Error in Respondent Driven Sampling

In a recent publication in the Journal of Official Statistics, Sunghee Lee and her SRC colleagues Tuba Suzer-Gurtekin, James Wagner, and Rick Valliant applied the well-known Total Survey Error (TSE) framework to better understand potential sources of error in estimates produced by Respondent Driven Sampling (RDS). The various sources of error in RDS estimates have not previously been studied with this level of conceptual rigor, and the article makes an important contribution to the literature, with several take-away points for researchers using this type of non-probability sampling (which is often used to sample hard-to-reach populations). Researchers using RDS often make strong assumptions about the nonresponse and measurement errors (or lack thereof) associated with this technique, and this article provides empirical evidence about the magnitudes of these types of errors.

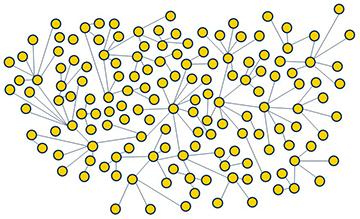

The authors begin by providing a nice overview of the basics of RDS for those who may not be familiar with the technique, focusing on key recruitment concepts (such as recruitment coupons, which are required for study participation) and the importance of social networks for the technique. They also discuss how estimators are formed based on the RDS design, and the importance of the sizes of social networks for those estimators (interestingly, the only estimators proposed in this literature have been descriptive in nature, and estimators of parameters like regression coefficients have not yet been clearly defined). Next, they review some of the key assumptions underlying estimation based on this technique, and why some of these assumptions might be unreasonable in practice. Finally, they apply the TSE framework to RDS, and discuss the various sources of error that might arise when applying RDS in real data collections.

They then present the results of analyses of two publicly available data sets from studies that used RDS to collect information on persons at risk of HIV, focusing on the nonresponse error arising from the recruitment process and on measurement error in reports of network size. Importantly, these two public use data sets contain coupon distribution information from the recruitment process, which is essential for studying potential nonresponse errors in this context. The authors found that many recruitment chains, which are important for the RDS process, ended or 'died' very early (in both studies, fewer than one coupon was redeemed per potential recruiter), contrary to a common assumption of exponential growth in the chains, and that coupon redemption rates (as proxies of response rates) were relatively low. Furthermore, respondents within the same recruitment chain tended to have very similar characteristics (or, put differently, within-chain correlations were quite high). In addition, the authors presented evidence of potential nonresponse bias, finding that recruiters with lower income and higher health risks generated more recruits, and that peers with stronger relationships were more likely to accept recruitment coupons. These findings suggest that the recruitment process is not in fact random, contradicting another common assumption underlying RDS.
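To make the chain-growth point concrete, the following minimal Python sketch shows how coupon records might be summarized. The `Recruiter` record, its field names, and the function names are hypothetical and do not come from the article or its data sets; the wave-size function assumes a simple branching model in which every recruiter brings in the same average number of recruits.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Recruiter:
    """Hypothetical record of one potential recruiter's coupons."""
    coupons_issued: int
    coupons_redeemed: int


def redemption_rate(recruiters: List[Recruiter]) -> float:
    """Share of all issued coupons that were redeemed (a response-rate proxy)."""
    issued = sum(r.coupons_issued for r in recruiters)
    if issued == 0:
        raise ValueError("no coupons were issued")
    return sum(r.coupons_redeemed for r in recruiters) / issued


def mean_recruits(recruiters: List[Recruiter]) -> float:
    """Average number of redeemed coupons per potential recruiter."""
    if not recruiters:
        raise ValueError("no recruiters supplied")
    return sum(r.coupons_redeemed for r in recruiters) / len(recruiters)


def expected_wave_sizes(seeds: int, recruits_per_recruiter: float,
                        waves: int) -> List[float]:
    """Expected sample size at each wave under a simple branching model.

    Assumption: each respondent recruits the same average number of peers,
    so wave k has seeds * m**k expected members (wave 0 is the seeds).
    """
    return [seeds * recruits_per_recruiter ** k for k in range(waves + 1)]
```

Under this simplified model, an average below one redeemed coupon per recruiter means the expected wave sizes shrink with every wave, which is consistent with chains dying early rather than growing exponentially.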

In terms of measurement error, self-reported network sizes were found to be quite variable and in some cases unreasonable (in one of the studies, 5.9% of reports were zero, and one report was 2,100), suggesting possible measurement error in these reports (which are essential for estimation based on the RDS technique). These results were important because RDS assumes that there is a positive relationship between network size and the probability of being selected for recruitment, and widely varying network sizes can lead to weighting effects on estimates (both in terms of bias and variance). The authors demonstrated how both estimates of descriptive statistics and the variances of those estimates were strongly affected by the highly variable weights that resulted. The authors also considered the effects of the weights on estimates of bivariate associations in logistic regression models, and found that the extreme weights can result in unexplainable and unreasonable associations. These results spoke to the importance of trying to apply different weight smoothing approaches when using these types of estimators.
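The weighting effect described above can be illustrated with a short Python sketch. It assumes an inverse-network-size weighted estimator of the kind commonly used with RDS (often called RDS-II or the Volz-Heckathorn estimator), which may differ from the estimators the authors evaluated. The function names, the floor applied to zero reports, and the optional cap are illustrative assumptions, and Kish's approximate design effect is used only as a simple summary of how much variable weights inflate variance.

```python
from typing import List, Optional


def inverse_degree_weights(degrees: List[int], floor: int = 1,
                           cap: Optional[int] = None) -> List[float]:
    """Weights proportional to 1 / reported network size.

    Assumptions: reports below `floor` (e.g. zero) are raised to `floor`
    so the weight is defined, and `cap`, if given, truncates very large
    reports as a crude form of weight smoothing.
    """
    weights = []
    for d in degrees:
        d = max(d, floor)
        if cap is not None:
            d = min(d, cap)
        weights.append(1.0 / d)
    return weights


def weighted_proportion(outcomes: List[int], weights: List[float]) -> float:
    """Weighted mean of a 0/1 outcome: sum(w * y) / sum(w)."""
    if len(outcomes) != len(weights):
        raise ValueError("outcomes and weights must have the same length")
    return sum(w * y for w, y in zip(weights, outcomes)) / sum(weights)


def kish_design_effect(weights: List[float]) -> float:
    """Kish's approximate design effect due to unequal weights:
    n * sum(w^2) / (sum(w))^2, which equals 1 when all weights are equal."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2
```

Comparing the estimate and the design effect with and without a cap is one simple way to check how sensitive a result is to a handful of extreme network-size reports.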

In conclusion, the authors emphasize the importance of assessing these errors and assessing the sensitivity of estimates to different weighting approaches in a given study before trying to make inferences about hard-to-reach populations. They discuss the potential advantages of follow-up interviews, and how careful design of these interviews should be considered. They also discuss possible techniques for incorporating the errors that they discovered in inferential procedures, introducing many possible avenues for future research.

This article presents a careful study of the errors that can arise in RDS, and confirms that similar types of survey errors occurred in two independent RDS studies of at-risk populations. As with any empirical study that carefully applies the TSE framework to a given type of survey design, the errors identified should help future researchers applying such designs to make better inferences and to think carefully about how these types of RDS designs can be improved.